AI rarely enters an organisation through a strategic decision.

More often, it arrives quietly, through individuals experimenting with tools that make their work a little easier: rewriting emails, summarising meetings, generating first drafts or analysing datasets.

Each action is small and practical.

Yet these small steps represent a shift in how work gets done long before leaders realise the extent of the change. This bottom-up pattern mirrors what we described in The Invisible AI Revolution: AI embeds itself not through grand announcements, but through steady, unnoticed integration into everyday tasks.

This early experimentation is healthy. It reflects curiosity and a willingness to improve.

But when AI adoption grows without structure, its impact becomes uneven. Some teams accelerate rapidly, gaining efficiency and producing more polished outputs, while others lag behind.

Instead of a single organisational rhythm, two emerge: one enhanced by AI and one unchanged, creating variability in the quality, speed and consistency of work. According to McKinsey’s latest global survey, AI adoption is increasingly driven by individuals and small teams, which suggests this drift is becoming the default pattern in many organisations.

The Risks of Unmanaged Adoption

The most visible outcome of unmanaged AI adoption is inconsistency.

When one department uses AI to accelerate reporting while another takes a more traditional approach, leaders receive information that differs not just in content but in format, tone and depth. Comparing these outputs becomes difficult. Confidence in their reliability may also vary, particularly when the influence of AI is not disclosed.

A second, more subtle risk is the loss of transparency.

When AI is used informally, leaders cannot easily see where automation played a role, how information was produced or whether quality checks were applied.

This uncertainty can erode trust, especially when outputs influence decisions. Over time, these inconsistencies can undermine organisational coherence, something strategy leaders work hard to protect.

Risk also grows in the shadows.

Without guidance, teams may inadvertently rely on outdated models, hallucinated content or biased outputs, not because they are careless but because they lack clear standards.

In parallel, cultural divides can emerge: AI-confident teams push ahead, while others feel uncertain or excluded. None of these issues stem from AI itself. They arise from an absence of direction.

Why AI Leadership Must Sit Within the Strategy Function

Some organisations instinctively place AI leadership within IT, assuming technical expertise is the primary requirement.

While IT plays an essential role in security, data protection and tooling, the real influence of AI lies in how it shapes decisions, workflows and performance, all of which are inherently strategic.

Strategy Directors are already responsible for alignment, coherence and the frameworks that give structure to organisational performance. AI now touches each of these domains.

As AI influences the pace of analysis, the structure of reporting and the quality of insights, it becomes part of the organisation’s strategic machinery. Allowing it to develop without strategic input creates unnecessary misalignment.

Positioning AI leadership within the strategy function ensures that the technology strengthens the organisation rather than fragments it.

Strategy leaders bring the perspective needed to integrate AI into objectives, KPIs, governance and communication.

They ensure that AI adoption is purposeful, consistent and aligned with long-term goals.

Crucially, they create the conditions for safe and confident innovation rather than restricting experimentation.

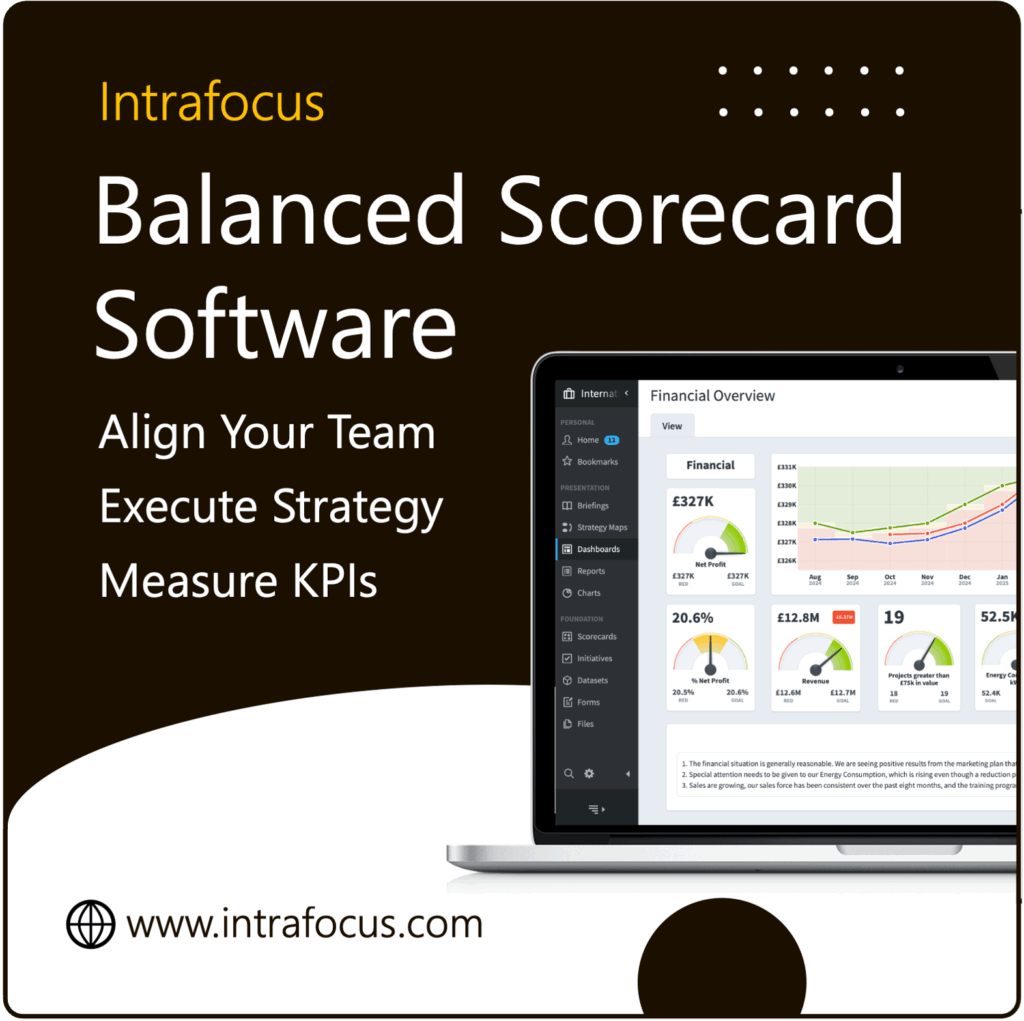


Turning Scattered Experiments into Organisational Capability

For AI to move from personal productivity tool to organisational asset, it must evolve into capability. Capability requires coherence: shared intent, standards, training and measurement.

The first step is clarity.

Organisations need a defined purpose for AI: why they are using it, where it adds value and how it supports strategic outcomes. Clear expectations remove ambiguity and give teams a sense of direction.

Standards then bring consistency.

These might cover acceptable use, human oversight, documentation, tone, data handling and quality assurance.

Standards do not diminish creativity; they make reliable innovation possible. They ensure that work produced with AI is trusted and comparable across teams.

Training expands this capability.

If only a handful of individuals feel confident using AI effectively, the organisation cannot claim meaningful adoption. Structured learning ensures that confidence grows evenly, reducing the risk of uneven performance.

Measurement completes the framework.

Tracking adoption, quality, time saved and output reliability provides leaders with insight into the value AI is delivering. These metrics naturally align with the Organisational Capacity perspective of the Balanced Scorecard, where investments in people, tools and processes are evaluated by their strategic contribution.
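
As a rough illustration of how such measures might be calculated, the Python sketch below rolls hypothetical team-level records up into four indicators: adoption rate, quality pass rate, hours saved and the gap between the most and least AI-active teams. The record structure, the field names and the sample figures are all assumptions made for illustration. They are not taken from Spider Impact or from any particular scorecard template.

```python
from dataclasses import dataclass


@dataclass
class TeamUsage:
    """Hypothetical per-team record; every field name here is an assumption."""
    team: str
    staff: int             # people in the team
    ai_users: int          # people who used an approved AI tool in the period
    hours_saved: float     # self-reported time saved in the period
    outputs_reviewed: int  # AI-assisted outputs that went through a quality check
    outputs_passed: int    # reviewed outputs that passed the check


def adoption_rate(t: TeamUsage) -> float:
    """Share of the team using AI; 0.0 for an empty team."""
    return t.ai_users / t.staff if t.staff else 0.0


def summarise(teams: list[TeamUsage]) -> dict[str, float]:
    """Roll team records up into organisation-level indicators."""
    staff = sum(t.staff for t in teams)
    reviewed = sum(t.outputs_reviewed for t in teams)
    rates = [adoption_rate(t) for t in teams]
    return {
        "adoption_rate": sum(t.ai_users for t in teams) / staff if staff else 0.0,
        "quality_pass_rate": sum(t.outputs_passed for t in teams) / reviewed if reviewed else 0.0,
        "hours_saved": sum(t.hours_saved for t in teams),
        # Spread between the most and least AI-active teams.
        "adoption_gap": max(rates) - min(rates) if rates else 0.0,
    }


# Illustrative figures only, not real data.
teams = [
    TeamUsage("Finance", staff=20, ai_users=15, hours_saved=40.0,
              outputs_reviewed=50, outputs_passed=45),
    TeamUsage("Operations", staff=30, ai_users=6, hours_saved=10.0,
              outputs_reviewed=20, outputs_passed=18),
]
summary = summarise(teams)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

The adoption gap is included because it gives a simple number for the two-rhythm problem described earlier: a wide gap suggests that some teams are moving ahead with AI while others are not.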

Integrating AI into the Seven Step Strategic Planning Process

AI creates the most value when it becomes part of the strategic planning cycle rather than a peripheral initiative. The Intrafocus Seven Step Strategic Planning Process provides a practical structure for achieving this.

Organisations begin by clarifying the foundation: defining AI’s purpose in relation to mission and values.

They then assess current usage, identifying both strengths and risks.

Next, they establish clear strategic objectives for AI, such as improving decision-making, enhancing reporting accuracy or accelerating analysis.

KPIs translate these objectives into measurable performance indicators such as adoption rates, output quality or efficiency gains.

From here, structured initiatives bring the strategy to life. These might include training programmes, governance frameworks, pilot projects or technology investments.

Communication ensures consistency, enabling teams to use AI confidently and responsibly.

Finally, automation tools such as Spider Impact allow leaders to track AI objectives, KPIs and initiatives in one place, ensuring alignment and accountability.

In this model, AI becomes a core driver of strategic performance, not a series of disconnected experiments.

The Leadership Advantage: Directing AI with Purpose

Clarity gives leaders a powerful advantage.

When the organisation knows what AI is for, how it should be used and how success will be measured, the technology becomes a multiplier of strategic intent.

Decisions become quicker and more reliable. Reporting becomes more consistent. Teams work with greater confidence, knowing that their use of AI aligns with organisational expectations.

External frameworks such as the OECD AI Principles reinforce this direction by promoting transparency, accountability and responsible use. These principles complement internal strategy, strengthening the organisation’s ability to innovate safely and consistently.

Purposeful leadership reduces risk, encourages fair adoption and accelerates learning. It enables organisations to benefit from AI’s strengths without losing control of quality or coherence.

Strategy Directors as AI Stewards

As AI reshapes the way organisations operate, Strategy Directors occupy a pivotal position.

Their role is no longer to observe emerging AI behaviour from a distance, but to guide it proactively, thoughtfully and intentionally.

Stewardship means providing clarity, creating standards and ensuring alignment.

It means championing responsible innovation while protecting organisational coherence.

It means ensuring that AI enhances performance rather than introducing noise or inconsistency.

When Strategy Directors take on this stewardship role, AI becomes more than an invisible force shaping operational habits.

It becomes a strategic asset: deliberate, measured and aligned with the organisation’s long-term goals.

If you want to bring clarity to your organisation’s AI usage, align it with strategy and measure its impact, Intrafocus can help. Our strategic planning workshops and Spider Impact platform provide the structure needed to turn AI experimentation into sustainable, aligned performance.